AI brings many benefits to cybersecurity. But have you ever considered its disadvantages?

What are the disadvantages of AI in security? There is not just one, but many.

For years, artificial intelligence has improved cybersecurity tools. Machine learning has made network security, antivirus, and fraud detection software more effective because it can spot problems faster than humans can.

At the same time, AI has created new dangers for cybersecurity. Examples of AI-powered cyber attacks include brute force, denial of service (DoS), and social engineering attacks.

What are the disadvantages of machine learning in cybersecurity?

One common problem when implementing ML for cybersecurity is poor-quality or insufficient data. Training data is one of the most important ingredients in building an ML model, because the model learns to produce the desired output from it.

As AI becomes cheap and widely available, it brings new risks, such as tricking ChatGPT into writing malicious code or a fake letter from Elon Musk requesting donations.

Deepfake tools can also create strikingly convincing fake audio tracks or video clips from relatively little training data.

The more comfortable users become sharing sensitive information with AI, the more privacy concerns rise.

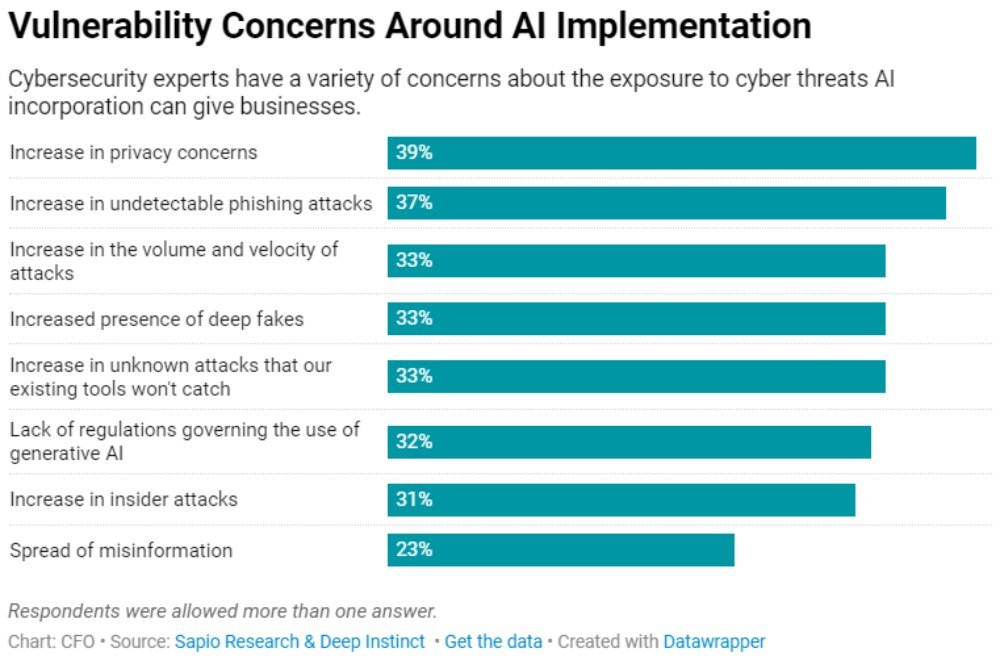

AI cyber attacks statistics (as of 2023):

So, let us discuss the various disadvantages of AI in cybersecurity.

Disadvantages Of AI In Cybersecurity: Top Risks

Like all technology, AI can be used for good or bad. Tools developed for the well-being of humanity can be used by threat actors to carry out fraud, scams, and all kinds of other cybercrime.

What are the problems with AI in cybersecurity?

Let us look at some of the risks of artificial intelligence in cybersecurity:

1. Optimization Of Cyber Attacks

Generative AI models help attackers scale their attacks with unprecedented speed and complexity.

Advances in generative AI also shape how attackers exploit the complexity of cloud environments and take advantage of geopolitical tensions in their advanced attacks.

Attackers can also use generative AI to refine their ransomware and phishing techniques.

2. Malware Automation

Powerful AI tools like ChatGPT can crunch numbers accurately and write code, potentially assisting or even replacing software developers with output of comparable or better quality.

Yet experts have already found ways around AI safeguards to produce complex data-theft executables, and future AI-powered tools could be even better at creating such malware.

3. Physical Safety Compromise

The more systems depend on AI, such as autonomous vehicles, manufacturing and construction equipment, or healthcare systems, the greater the potential risks to physical safety.

For instance, a cybersecurity breach in an AI-operated self-driving car could threaten the physical safety of its passengers.

Similarly, on a construction site, an attacker could tamper with the dataset used by maintenance tools to trigger hazardous conditions.

4. Privacy Risks

In an embarrassing bug for OpenAI CEO Sam Altman, ChatGPT exposed parts of other users' chat histories. Although the bug was fixed, the large amount of data that AI processes creates other privacy risks.

For instance, hackers who break into an AI system could gain access to many kinds of sensitive information.

An AI system developed for marketing, advertising, profiling, or surveillance could also pose privacy risks that even George Orwell never imagined.

In some countries, AI profiling technologies are already used in ways that invade user privacy.

5. AI Model Stealing

AI models can be stolen through network attacks, social engineering, and vulnerability exploitation by a range of threat actors, from state-sponsored agents and insiders such as corporate spies to run-of-the-mill hackers.

Attackers can then manipulate and modify stolen models for various malicious activities, compounding the risks AI poses to society.

6. Data Poisoning And Data Manipulation

Powerful as it is, AI can be vulnerable to data manipulation.

AI relies heavily on its training data. If that data is modified or poisoned, an AI-driven tool may produce unexpected or even malicious results.

An attacker who can inject adversarial data into a training dataset may be able to change the model's outputs. A subtler form of manipulation is bias injection.

Such attacks can be especially damaging in industries such as healthcare, automotive, and transportation.
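To make data poisoning more concrete, here is a minimal Python sketch, assuming a toy one-dimensional nearest-centroid classifier rather than any real security product. The scores, labels, and the `train`/`predict` helpers are hypothetical. A handful of mislabeled points injected by an attacker drag the "benign" centroid toward malicious territory, so an input the clean model flags as malicious slips past the poisoned one.

```python
from statistics import mean

def train(samples):
    """Hypothetical toy model: compute one centroid per label from (score, label) pairs."""
    by_label = {}
    for score, label in samples:
        by_label.setdefault(label, []).append(score)
    return {label: mean(scores) for label, scores in by_label.items()}

def predict(centroids, score):
    """Return the label whose centroid is closest to the given score."""
    return min(centroids, key=lambda label: abs(centroids[label] - score))

# Illustrative clean data: label 0 = benign, label 1 = malicious.
clean = [(1.0, 0), (1.5, 0), (2.0, 0), (6.0, 1), (6.5, 1), (7.0, 1)]

# Attacker-injected samples: high (malicious-looking) scores mislabeled as benign.
poison = [(9.0, 0), (9.5, 0), (10.0, 0)]

suspicious_score = 5.0
print(predict(train(clean), suspicious_score))           # prints 1 (flagged as malicious)
print(predict(train(clean + poison), suspicious_score))  # prints 0 (now passes as benign)
```

Here the poisoned samples shift the benign centroid from 1.5 to 5.5, close enough to the suspicious score to flip the decision. Real-world poisoning is usually far subtler, but the principle of corrupting what a model learns from is the same.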

7. Impersonation

Hollywood already uses AI to fool audiences, as in the documentary Roadrunner, or to digitally turn back time for Harrison Ford with de-aging in Indiana Jones and the Dial of Destiny.

But AI is also used to stage virtual kidnappings, as in one parent's nightmare in which she heard what sounded like her child's voice, followed by a man demanding a ransom.

Law enforcement believes that AI will also be used for impersonation fraud, including grandparent scams.

Generative AI can also produce text in a thought leader's voice for use in scams, which makes its security risks significant.

8. Sophisticated Attacks

Threat actors can use advanced AI to develop sophisticated malware, impersonate others in scams, and poison AI training data.

AI lets them automate phishing, malware, and credential-stuffing attacks. In adversarial attacks, AI can also help attackers evade security systems such as voice recognition software.

9. Reputation Damage

If an organization that relies on AI suffers technology failures or a cyber incident involving data loss, its reputation can suffer. The consequences can include fines, civil penalties, and damaged client relationships.

How Will AI Affect The Cybersecurity Job Market?

AI adoption in cybersecurity requires a skilled workforce competent in developing, implementing, and managing AI systems.

Organizations urgently need cybersecurity professionals who not only understand AI technologies but can also handle the related threats and issues.

Is AI a threat to cybersecurity jobs?

The primary concern is the potential displacement of workers and resulting unemployment due to AI and automation.

As AI becomes more established, many jobs and human functions risk being automated, potentially leaving large numbers of people out of work and worried about the security of their careers.


Final Thoughts

While AI has great power to strengthen cyber defenses, its weaknesses and attendant risks must be recognized.

The disadvantages of AI in cybersecurity, including algorithmic bias, susceptibility to adversarial attacks, and the role of the human element in decision-making, show that AI must be implemented with care and monitored continuously.

Organizations that understand and proactively address these drawbacks can navigate the evolving threat landscape more effectively.

The key to harnessing AI's potential while minimizing its downsides is a robust cybersecurity strategy that protects sensitive data and digital infrastructure from growing threats.
